One thing up front: there is, of course, no definitive answer to the question posed at the beginning. The areas of application and the goals that users want to achieve are simply too different. Is the intention to capture large but mostly empty areas or long distances as cost-effectively as possible and merely to recognise processes? Or is it important to obtain high-resolution recordings of all image areas and to zoom into certain image areas only if necessary? The following article attempts to shed light on the topic while inviting a response and encouraging discussion.

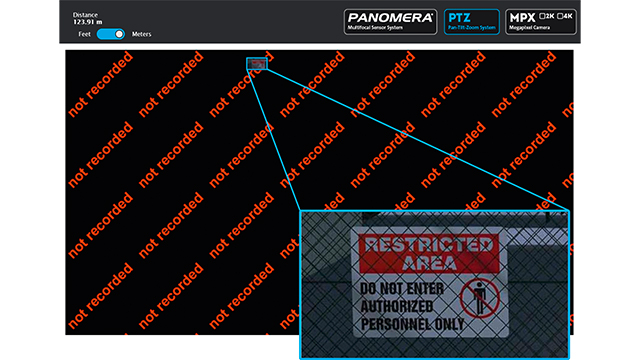

It is well known that the abbreviation “PTZ” stands for “Pan”, “Tilt” and “Zoom”. These three functions enable PTZ cameras to capture objects and people, and to enlarge selected image portions by optical zoom for more accurate identification. PTZ cameras are mainly used in live video surveillance. They help to track processes in detail, thus enabling prompt intervention. However, events which take place outside the area that makes up the current “PTZ focus” go undetected due to technical limitations. This can be a problem precisely in areas where traffic is high. Moreover, an operator can only examine one detail view per camera system at a time. In theory, therefore, complex situations need as many PTZ systems as there are incidents taking place, which of course is unrealistic. PTZ cameras are also not ideally suited to analysis, as the image section and resolution (that is to say, the data quality) vary constantly. Furthermore, they are usually unable to adequately satisfy data protection requirements, even if techniques such as “privacy masking” are in place.

The situation is different with modern megapixel cameras. These cameras consistently reproduce the overall image, often in very good quality, and are effective in providing an overview of large expanses. However, the basic problem with the physics remains: Despite their sensor resolution, which in some cases is excellent, megapixel cameras will always represent the image background at substantially lower resolution than the foreground. But as described in DIN EN 62676-4, some application cases demand a certain minimum resolution over the entire area under observation.

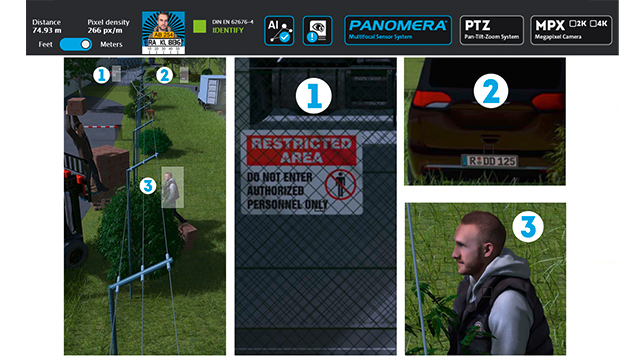

For example, images of faces must be captured at a resolution of at least 250 pixels per metre (px/m) in order to be usable in court, whereas only 62.5 px/m is required for pure analysis of larger objects. Megapixel cameras thus “waste” the valuable “resolution density” resource in the image foreground, while the same resource is lacking in the background. Nor is this drawback solved by adding extra systems or a larger number of smaller cameras with different focal lengths. Attempts to integrate such systems efficiently with existing video management systems (VMS) entail immense amounts of additional work and are mostly doomed to failure. The same result can be expected when combining several large megapixel systems. In this case, the hardware and infrastructure costs quickly become unmanageable due to the “resolution overcompensation” in the foreground described above, and bandwidth problems arise which can even push the limits of existing 1 Gbit/s networks. The same applies to planning, which would rapidly involve an entirely unacceptable amount of work.
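The relationship between distance and resolution density can be illustrated with a little geometry. The following is a minimal sketch, assuming an idealised pinhole camera looking straight at a plane and ignoring lens distortion and mounting angle. The sensor width of 3840 pixels and the 90 degree horizontal field of view are hypothetical values chosen for illustration; only the 250 px/m and 62.5 px/m thresholds come from the text above.

```python
import math


def pixel_density(h_pixels: int, hfov_deg: float, distance_m: float) -> float:
    """Horizontal pixel density (px/m) on a plane facing the camera.

    Simplified pinhole model: the scene width covered at a given distance
    is 2 * d * tan(HFOV / 2), and the sensor's pixels are spread over it.
    """
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return h_pixels / scene_width_m


def max_distance(h_pixels: int, hfov_deg: float, required_px_per_m: float) -> float:
    """Largest distance (m) at which the required density is still reached."""
    return h_pixels / (
        2.0 * required_px_per_m * math.tan(math.radians(hfov_deg) / 2.0)
    )


# Hypothetical single-sensor camera (assumed values, not from the article).
H_PIXELS = 3840
HFOV_DEG = 90.0

for d in (5, 20, 50):
    print(f"{d:>3} m: {pixel_density(H_PIXELS, HFOV_DEG, d):6.1f} px/m")

# Thresholds quoted in the article: 250 px/m (faces), 62.5 px/m (analysis).
for required in (250.0, 62.5):
    reach = max_distance(H_PIXELS, HFOV_DEG, required)
    print(f"{required:5.1f} px/m is reached up to {reach:.2f} m")
```

Under these assumptions, the camera delivers about 384 px/m at 5 m but only about 38 px/m at 50 m. The 250 px/m threshold is met only up to roughly 7.7 m and the 62.5 px/m threshold up to roughly 30.7 m, which is the foreground "surplus" and background "shortfall" described above.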

The problem of “minimum resolution for high-performance analysis” is also not solved satisfactorily with classic single-sensor cameras. According to the data analysis principle “garbage in, garbage out”, the results from data processing systems can only be as good as the quality of the input data. In order to obtain good results from video analysis, the essential data quality criterion is to reach or exceed, in all areas of the observed space, the minimum image resolution that the respective analysis requires. Ideally, this minimum can already be defined during planning.

The drawbacks associated with the surveillance of large expanses as outlined above have prompted many integrators to resort to a combination of PTZ and single-sensor cameras. One alternative is presented by “multi-sensor systems”, in which a number of sensors and lenses are accommodated in one housing, usually covering an angle of 180 or 360 degrees. However, even these systems cannot satisfactorily resolve the known disadvantages, such as low resolution in distant image areas, no high-resolution recording of the overall scene, or data of insufficient quality to allow analysis over the entire area.

Finally, the patented multifocal sensor technology (MFS) offers the advantages of both PTZ and megapixel systems. To do this, it makes use of multiple lenses and sensors (“multi”), each with a different focal length (“focal”), in one housing. Each sensor, with its own focal length, is assigned to a different area of the image. In this way, MFS systems are able to reproduce the middle distance and the background with the same high minimum resolution density as scenes in the foreground. Powerful software stitches up to eight individual images together to create one high-resolution overall image, and at the same time manages the calibration of the systems as well as time and image synchronisation. MFS systems can also be scaled and combined as desired, and customers can operate several MFS cameras as a single camera system.
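The idea of assigning different focal lengths to different distance zones can be sketched with the same simple geometry. This is only an illustration under stated assumptions, not Dallmeier's actual design method: it uses an idealised pinhole model, a hypothetical sensor width of 2000 pixels, and three hypothetical distance zones. For each zone it computes the widest horizontal field of view that still reaches the 62.5 px/m analysis threshold mentioned earlier at the far edge of that zone. For a given sensor size, a narrower field of view corresponds to a longer focal length.

```python
import math


def widest_hfov_deg(h_pixels: int, far_distance_m: float, min_px_per_m: float) -> float:
    """Widest horizontal field of view (degrees) that still delivers
    min_px_per_m at far_distance_m, using a simplified pinhole model."""
    # Half of the scene width the sensor may cover at the required density.
    half_width_m = h_pixels / (2.0 * min_px_per_m)
    return math.degrees(2.0 * math.atan(half_width_m / far_distance_m))


# All values below are assumptions for illustration only.
H_PIXELS = 2000                        # hypothetical sensor width in pixels
MIN_PX_PER_M = 62.5                    # analysis threshold quoted in the article
ZONE_FAR_EDGES_M = (16.0, 80.0, 320.0) # hypothetical far edge of each zone

fovs = [widest_hfov_deg(H_PIXELS, d, MIN_PX_PER_M) for d in ZONE_FAR_EDGES_M]

for far_edge, fov in zip(ZONE_FAR_EDGES_M, fovs):
    print(f"zone up to {far_edge:5.0f} m: field of view at most {fov:5.1f} degrees")
```

With these numbers, the near zone can be covered with a 90 degree lens, while the zones ending at 80 m and 320 m need progressively narrower views of about 22.6 and 5.7 degrees to hold the same minimum density.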

For classic video surveillance and video observation, this means that it is possible to keep very large spatial contexts in view in high resolution on just one screen. If necessary, system operators can open any number of zoom windows with a click of the mouse. Thus, even complex situations remain under complete control. The ultimate effect is practically the same as that of a theoretically limitless number of “virtual” PTZ systems, but without ever losing the overall picture. For tracking during surveillance, MFS technology has the advantage that it records the entire image area at a previously defined minimum resolution, with the result that no information is lost, e.g., for forensic analysis. Finally, for analytics, MFS technology provides a constant minimum data quality across the entire object space using significantly fewer systems.

The MFS technology offers the advantage that all image areas are recorded in the previously defined minimum resolution, so no information is lost.

Josua Braun, Marketing Director, Dallmeier Electronic

Undeniably, conventional technologies still have functions in which they are unsurpassed, such as requirements for extremely high detail resolution which can only be satisfied with PTZ systems, or situations in which an expansive general overview without high-resolution details is sufficient. However, when the two requirements are combined, and particularly when the objective is to reproduce large regions in a defined minimum resolution over the entire area, the advantages of multifocal sensor technology truly become apparent. Security managers, e.g., in medium-sized and large enterprises, stadium operators or urban administrators can capture extensive areas with a substantially smaller number of systems. On average, the manufacturer Dallmeier expects to be able to replace up to 24 single cameras with one MFS system. This in turn reduces overall costs to a minimum, particularly through savings on infrastructure, installation and operating costs.

> The foregoing article was first published in: Protector 9/2020