AI-based video analysis promises a technological quantum leap with significant benefits for customers, but only if the competent, i.e. informed, user can assess the technology correctly. This blog article conveys some basics that help readers judge its functionality, usability and benefit for their own specific application.

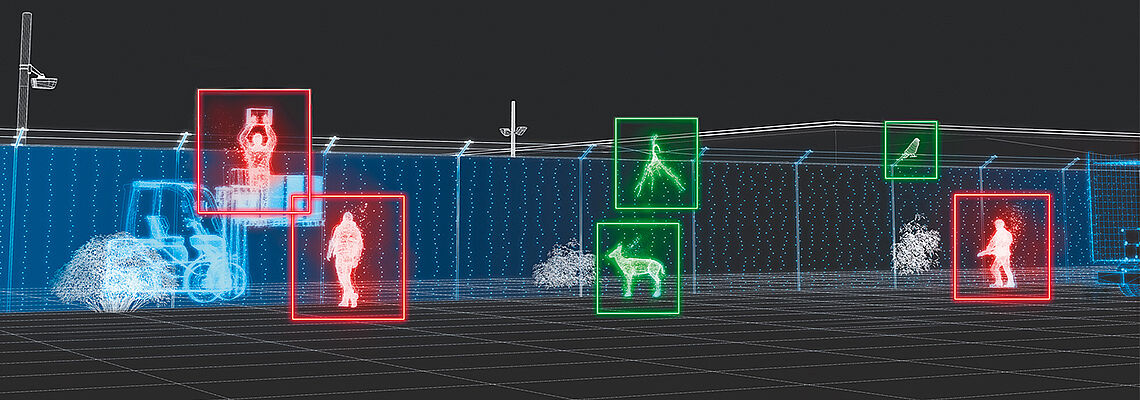

Routines based on Artificial Intelligence (AI) have been spreading in video security technology for some time. A steadily growing number of new applications and products rely on such algorithms to offer new analyses or to make existing analyses significantly more reliable. The objective is to provide appreciable added value for users, and the results speak for themselves. To give just one example: not long ago, a great deal of work was required before classic image processing could recognise a tree moving in the wind as a false alarm. Today AI does that effortlessly.

The essential difference between image or video analyses based on classic image processing and those that use Artificial Intelligence is that the algorithms are no longer “just” programmed; they can also be “taught” with the aid of large volumes of data. From this data, the system learns to detect patterns and thus to tell, for example, a tree from an intruder. But machine learning also raises new problems and challenges. One well-known example is that recognition quality can differ between ethnic groups, an issue that has even made headlines in the news. Yet the cause is relatively simple: an AI system can only learn reliably if it is supplied with enough data that is also sufficiently diverse and evenly distributed.

All of this leads to the question of how to measure the performance of a system that uses Artificial Intelligence. What metrics allow a comparison between, for example, two routines, two different systems or two manufacturers? What does it mean when a product brochure promises, e.g., “95 percent detection accuracy” or “reliable recognition”? How good is an accuracy of 95%? And what is “reliable recognition”?

First of all, it is essential to understand how AI routines can be evaluated. The first step is to define, for the specific application and customer, what counts as “correct” and “incorrect”, especially in borderline cases. For example, in a system set up to recognise persons, should a detection count as correct if the image or video does not show a real person at all, but only an advertisement hoarding depicting one? This and other parameters must be defined. Once this definition has been established, a dataset is needed for which the correct results are known in advance. The AI analyses this dataset, and its output is compared with the expected results to determine the percentages of correct and incorrect detections. For this, mathematics offers a wide variety of metrics, such as sensitivity (the percentage of expected detections that were actually detected) or precision (the percentage of detections that are actually correct). Ultimately, therefore, the “quality” of an AI is always a statistical statement about the evaluation dataset used.
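
To make the two metrics concrete, here is a minimal Python sketch. It assumes the detections have already been compared with the known expected results and counted as true positives, false positives and false negatives; the class and function names and the counts are hypothetical and purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class EvaluationCounts:
    """Outcome counts from comparing detections with the known expected results."""
    true_positives: int   # expected objects that were detected
    false_positives: int  # detections with no matching expected object
    false_negatives: int  # expected objects that were missed


def sensitivity(c: EvaluationCounts) -> float:
    """Share of expected detections that were actually detected (also called recall)."""
    expected = c.true_positives + c.false_negatives
    # Returning 0.0 for an empty dataset is an arbitrary convention in this sketch
    return c.true_positives / expected if expected else 0.0


def precision(c: EvaluationCounts) -> float:
    """Share of detections that are actually correct."""
    detections = c.true_positives + c.false_positives
    return c.true_positives / detections if detections else 0.0


# Hypothetical counts for illustration only
counts = EvaluationCounts(true_positives=90, false_positives=30, false_negatives=10)
print(f"sensitivity = {sensitivity(counts):.2f}")  # 90 of 100 expected objects found
print(f"precision   = {precision(counts):.2f}")    # 90 of 120 detections correct
```

Both figures describe the same evaluation run, yet a brochure quoting only one of them would paint a quite different picture, and both are only valid for the dataset the counts came from.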

Statements about the quality of an AI analysis should be viewed with caution if not all parameters are known.

Dr. Maximilian Sand, Teamleader Artificial Intelligence, Dallmeier electronic

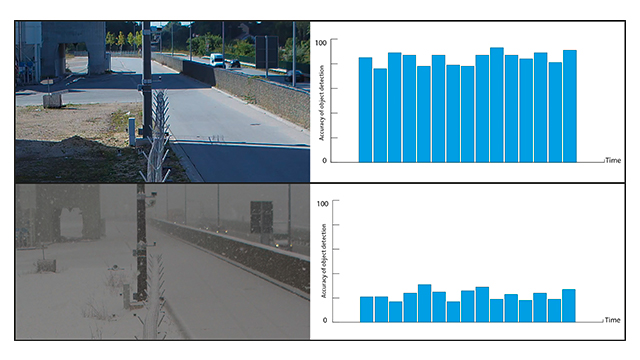

How useful this statement really is for the user or potential purchaser of a system depends on the distribution of the dataset. For example, an evaluation may attest to good recognition performance. But if the dataset consisted solely of image material from the summer months, the evaluation says nothing about the quality of the AI in winter, when light and weather conditions may be very different. In general, statements about the quality of an AI analysis, particularly those quoting specific figures such as “99.9%”, are to be treated with caution if not all parameters are known. If the dataset, the metrics and the other parameters are unknown, it is no longer possible to say how representative the result is.
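
One way to expose this effect is to break the evaluation down by recording condition instead of reporting a single figure. The Python sketch below assumes a hypothetical data format of one `(condition, was_detected)` pair per expected object; the toy data is invented to mimic a dataset drawn almost entirely from summer footage.

```python
from collections import defaultdict


def sensitivity_by_condition(samples):
    """Sensitivity per recording condition (e.g. season, time of day).

    samples: iterable of (condition, was_detected) pairs, one entry per object
    that the evaluation dataset says should have been detected (hypothetical format).
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for condition, was_detected in samples:
        totals[condition] += 1
        hits[condition] += int(was_detected)
    return {condition: hits[condition] / totals[condition] for condition in totals}


# Invented toy data: an evaluation set drawn almost entirely from summer footage
samples = (
    [("summer", True)] * 95 + [("summer", False)] * 3
    + [("winter", True)] * 1 + [("winter", False)] * 1
)

overall = sum(was_detected for _, was_detected in samples) / len(samples)
print(f"overall: {overall:.0%}")
for condition, value in sorted(sensitivity_by_condition(samples).items()):
    n = sum(1 for c, _ in samples if c == condition)
    print(f"{condition}: {value:.0%} from {n} objects")
```

The overall figure of 96 of 100 objects almost entirely reflects summer conditions, and the winter figure rests on just two objects, so it says practically nothing about winter performance.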

Every system has its limits, and of course this is also true of AI systems. Knowing these limits is therefore the fundamental prerequisite for making sound decisions. But here too, statistics and reality collide, as the following example illustrates. The smaller an object appears in the image or video, the harder it is for an AI system to recognise it. So one of the first questions users ask before buying a system is the maximum distance at which objects can be detected, because this determines the number of cameras needed and thus the cost of the overall system. But an exact distance simply cannot be specified. There is no value up to which the analysis delivers 100% correct results, nor another value beyond which recognition is entirely impossible. An evaluation can only return statistics, for example detection accuracy as a function of object size.
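
Such a statistic can be sketched in a few lines of Python: group the expected objects by their size in the image and compute a detection rate per size bin. The input format (object height in pixels plus a detected flag), the bin edges and the toy data are all hypothetical.

```python
def detection_rate_by_size(samples, bin_edges):
    """Detection rate per object-size bin.

    samples: (object_height_px, was_detected) pairs, one per expected object
    (hypothetical format).
    bin_edges: ascending pixel heights; bin i covers [edges[i], edges[i+1]).
    Returns (lower_edge, upper_edge, rate) tuples; rate is None for empty bins.
    """
    rates = []
    for lower, upper in zip(bin_edges, bin_edges[1:]):
        in_bin = [hit for height, hit in samples if lower <= height < upper]
        rates.append((lower, upper, sum(in_bin) / len(in_bin) if in_bin else None))
    return rates


# Invented toy data for illustration only
samples = [
    (12, False), (14, False), (15, True), (18, False),
    (22, True), (25, False), (28, True), (35, True),
    (42, True), (50, True), (61, False), (70, True), (75, True),
    (90, True), (130, True),
]

for lower, upper, rate in detection_rate_by_size(samples, [10, 20, 40, 80, 160]):
    label = "no data" if rate is None else f"{rate:.0%}"
    print(f"{lower:>3}-{upper - 1:>3} px: {label}")
```

The result is a curve rather than a cutoff. Even the bin in which every object was found rests on only two objects, so it does not prove error-free detection for large objects.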

Regarding system limits, it is conventional practice, e.g. in product datasheets, to describe them as far as possible using specific minimum and maximum values, such as a minimum distance or a minimum resolution. This is sensible, because customers and installers need points of reference to be able to rate the system. Even so, many unknowns remain, e.g. whether the manufacturer chose these limit values conservatively or optimistically. Users are therefore well advised to bear in mind that there are no sharp, well-defined limits in video analysis. Every system will inevitably make errors even within the specified limits, and at the same time it can return useful results outside them under favourable conditions.
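
How much the choice between a conservative and an optimistic limit matters can be illustrated with a small Python sketch. It assumes a size-binned detection curve like the one an evaluation returns and derives a “minimum object size” from a chosen target rate. The function name, the curve values and both targets are invented for illustration and do not describe any real product.

```python
def minimum_object_size(curve, target_rate):
    """Smallest bin lower edge from which every larger bin meets target_rate.

    curve: (lower_edge_px, detection_rate) pairs sorted by ascending size
    (hypothetical format). Returns None if even the largest bin misses the target.
    """
    limit = None
    # Walk from the largest objects downwards and stop at the first bin below target
    for lower_edge, rate in reversed(curve):
        if rate < target_rate:
            break
        limit = lower_edge
    return limit


# Invented curve for illustration; not measured data
curve = [(10, 0.30), (20, 0.70), (40, 0.85), (80, 0.93), (160, 0.97)]

print("optimistic (75% target): ", minimum_object_size(curve, 0.75), "px")
print("conservative (95% target):", minimum_object_size(curve, 0.95), "px")
```

From the same evaluation, the optimistic target yields a limit of 40 px and the conservative target a limit of 160 px. Neither figure reveals that some objects are still detected below the limit and some are still missed above it.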

As a user, the only real way to find out the true quality of an AI-based analysis is a direct comparison, because the figures and parameters quoted by the various manufacturers differ too widely. For such a comparison, the boundary conditions and the input must of course be identical for all systems. The best option is a live test with demo products, rented equipment or the like, which also reveals the performance of the system in exactly the use case required. Incidentally, this also describes the yardstick for evaluating the performance of AI systems in general: it all depends on the specific use case, which should be specified as precisely as possible. Only then can the right solution generate true added value for the customer.
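
A direct comparison boils down to scoring every candidate against one shared set of test clips with known expected results. The Python sketch below assumes a simple hypothetical format (one alarm flag per clip and system); the system names and all outputs are invented.

```python
from typing import Dict, Sequence, Tuple


def compare_on_same_clips(
    ground_truth: Sequence[bool], alarms: Dict[str, Sequence[bool]]
) -> Dict[str, Tuple[float, int]]:
    """Score several systems on one shared set of test clips.

    ground_truth[i] is True if clip i contains an event that should raise an alarm.
    alarms[name][i] is True if system `name` raised an alarm for clip i.
    Returns {name: (sensitivity, number_of_false_alarms)}.
    """
    events = sum(ground_truth)
    results = {}
    for name, raised in alarms.items():
        # A fair comparison requires identical input for every system
        if len(raised) != len(ground_truth):
            raise ValueError(f"{name} was not evaluated on the same clips")
        hits = sum(1 for truth, alarm in zip(ground_truth, raised) if truth and alarm)
        false_alarms = sum(1 for truth, alarm in zip(ground_truth, raised) if alarm and not truth)
        results[name] = (hits / events if events else 0.0, false_alarms)
    return results


# Invented outputs for eight test clips recorded at the intended site
ground_truth = [True, True, True, True, False, False, False, False]
system_a = [True, True, True, False, True, True, False, False]
system_b = [True, True, False, False, False, False, False, False]

for name, (sens, fa) in compare_on_same_clips(
    ground_truth, {"System A": system_a, "System B": system_b}
).items():
    print(f"{name}: sensitivity {sens:.0%}, false alarms {fa}")
```

In this toy case, System A misses fewer events but raises more false alarms than System B. Which trade-off is preferable depends on the specific use case.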

