Video technology continues to evolve at a dramatic pace: beyond providing visual evidence of events, it offers rapidly growing capabilities for automated or semi-automated analysis of image data. In such an environment, it is not always easy to keep a clear view of developments, as new solutions crowd onto the market at a furious pace and many more systems are still in the research and experimental stages. This brief article is intended to show how decision makers can avoid expensive mistakes, the most serious of which do not even concern the analysis systems themselves.

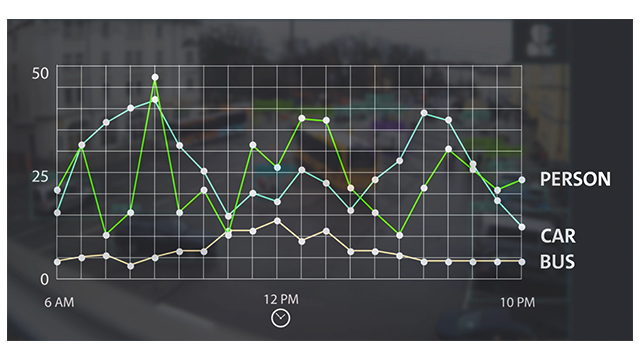

Generally speaking, cameras, as “optical sensors”, are superbly suited to capturing data for analysis: there are few better ways than a video image to extract an enormous diversity of data from complex contexts and situations with relatively little effort. The possibilities arising from video analysis are many and varied: “Crowd Analysis” for counting people or objects, “Appearance Search” for finding people based on certain features, various “Intrusion Detection” systems for safeguarding “Sterile Areas”, for example around stadiums or along the perimeters of critical infrastructure, and much more. Most systems today work with object classification based on neural networks, which is often equated in popular speech with “Artificial Intelligence”.

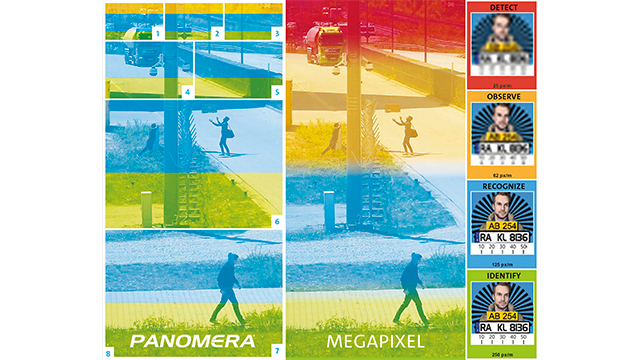

But in all the excitement, one thing is very often underestimated: the importance of data quality. A common mistake is to consider and evaluate only the analysis system, not the total solution. As a consequence, many users who “simply hung up a couple of cameras” and then “run” analyses on the resulting image material are disappointed. As the old adage “Quality In, Quality Out” expresses, the analysis results can only ever be as good as the input data, and that in turn depends on the quality of the image. Image quality is defined in DIN EN 62676-4 in terms of “pixels per metre (px/m)” and is the most important parameter for any video system, regardless of whether 250 px/m are required for a judge to be 100% certain in identifying an individual or 62.5 px/m are required for AI-based detection of objects such as people, bicycles, cars or animals.
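To make the px/m figure concrete, the following is a minimal sketch of how pixel density might be estimated for a given scene. It assumes the simplest possible relationship (horizontal pixel count divided by the real-world width covered at the target). The function names, the task labels and the example camera are hypothetical; only the two thresholds are taken from the text above.

```python
# Thresholds quoted in the article, in pixels per metre (px/m).
# The dictionary keys are illustrative labels, not terms from the standard.
REQUIRED_PX_PER_M = {
    "identification": 250.0,
    "ai_object_detection": 62.5,
}


def pixel_density(horizontal_pixels: int, scene_width_m: float) -> float:
    """Return the pixel density in px/m for a scene of the given width."""
    if scene_width_m <= 0:
        raise ValueError("scene_width_m must be positive")
    return horizontal_pixels / scene_width_m


def supported_tasks(density_px_per_m: float) -> list[str]:
    """List the tasks whose minimum density is met by the given value."""
    return [
        task
        for task, required in REQUIRED_PX_PER_M.items()
        if density_px_per_m >= required
    ]


if __name__ == "__main__":
    # Hypothetical example: a sensor 3840 pixels wide covering a 20 m wide scene.
    density = pixel_density(3840, 20.0)
    print(f"{density} px/m supports: {supported_tasks(density)}")
```

In this hypothetical case the camera delivers 192 px/m, which is enough for the object detection threshold but not for the identification threshold, even though the sensor itself would commonly be described as high resolution.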

This is why the key to success lies in reliably delivering the minimum value required for a specific form of analysis, e.g., to distinguish between people and vehicles, over the entire recorded area. This is only possible if a manufacturer possesses the appropriate tools and planning systems as well as the expertise and the experts to put these plans into practice. Moreover, camera technologies are needed that are designed to assure these minimum resolutions over large expanses as well. Even ultra-high-resolution megapixel cameras very quickly reach their performance limits, particularly in the more distant areas of the image, or they are uneconomical for large areas. By their nature, PTZ cameras are not suitable for analysing broader relationships at all, because each one covers only a certain region of the area at a time and they are primarily used for active video observation. Modern “multifocal sensor systems”, in which multiple sensors with different focal lengths are combined in a single system, in contrast deliver a precisely defined minimum resolution over the entire area to be captured, even in large spatial contexts. These “MFS” systems are usually also the most cost-effective approach, because fewer systems are required to achieve the necessary resolution across the entire area.
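The effect of distance on a single-sensor camera can be illustrated with a rough calculation. The sketch below assumes an idealised pinhole model in which the scene width at distance d is 2·d·tan(HFOV/2); it ignores lens distortion, mounting height and tilt, so it is an approximation rather than a planning tool. The sensor width, the field-of-view angles and the function names are hypothetical and do not describe any particular product.

```python
import math


def density_at_distance(horizontal_pixels: int, hfov_deg: float, distance_m: float) -> float:
    """Approximate pixel density (px/m) at a given distance from the camera."""
    if distance_m <= 0 or not 0 < hfov_deg < 180:
        raise ValueError("distance must be positive and 0 < hfov_deg < 180")
    # Width of the scene covered at this distance under the pinhole assumption.
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return horizontal_pixels / scene_width_m


def max_distance(horizontal_pixels: int, hfov_deg: float, min_density: float) -> float:
    """Farthest distance (m) at which the minimum density is still met."""
    if min_density <= 0 or not 0 < hfov_deg < 180:
        raise ValueError("min_density must be positive and 0 < hfov_deg < 180")
    return horizontal_pixels / (2.0 * min_density * math.tan(math.radians(hfov_deg) / 2.0))


if __name__ == "__main__":
    # Hypothetical wide-angle sensor: 3840 pixels across a 90 degree field of view.
    for distance in (10.0, 30.0, 60.0):
        density = density_at_distance(3840, 90.0, distance)
        print(f"{distance:5.1f} m: {density:6.1f} px/m")

    # Range over which the 62.5 px/m threshold from the article is met,
    # for the wide sensor and for a hypothetical narrower 30 degree sensor.
    print(f"wide:   {max_distance(3840, 90.0, 62.5):.1f} m")
    print(f"narrow: {max_distance(3840, 30.0, 62.5):.1f} m")
```

Under these assumptions the density halves every time the distance doubles, so the wide sensor falls below 62.5 px/m a little beyond 30 m, while a sensor with a narrower field of view holds the threshold much farther out. Combining sensors with different focal lengths, as the multifocal approach does, is one way to keep the minimum value across both near and far zones.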